OpenAI's Surprise Move: The gpt-oss Revolution

August 6, 2025

gpt-oss brings powerful AI capabilities directly to your personal devices

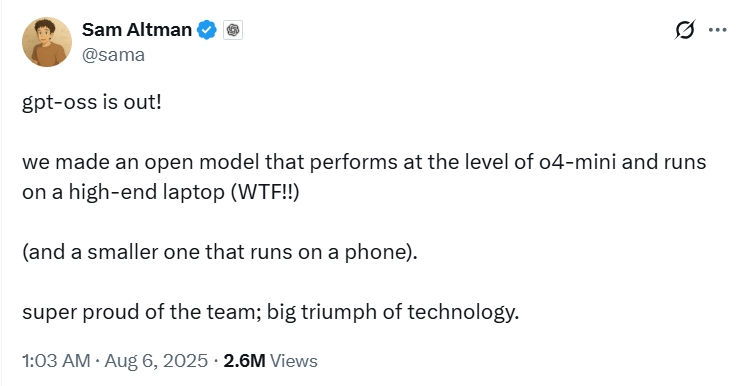

In a move that caught the tech world by surprise, Sam Altman, CEO of OpenAI, yesterday announced the release of gpt-oss, an open-source AI model that promises to bring ChatGPT-level intelligence to your personal devices. Yes, you read that right: AI that runs on your laptop, not in the cloud.

Sam Altman's announcement sparked immediate reactions from the global developer community

What Exactly is gpt-oss?

Think of gpt-oss as OpenAI's gift to the world: a powerful AI assistant that doesn't need an internet connection or expensive cloud services to work. According to Altman, this model performs at the level of "o4-mini" (one of OpenAI's advanced models), but here's the kicker: it can run on a high-end laptop.

Even more impressive? There's a smaller version that can allegedly run on your smartphone. Imagine having ChatGPT-like capabilities in your pocket, working entirely offline.

The Hardware Question Everyone's Asking

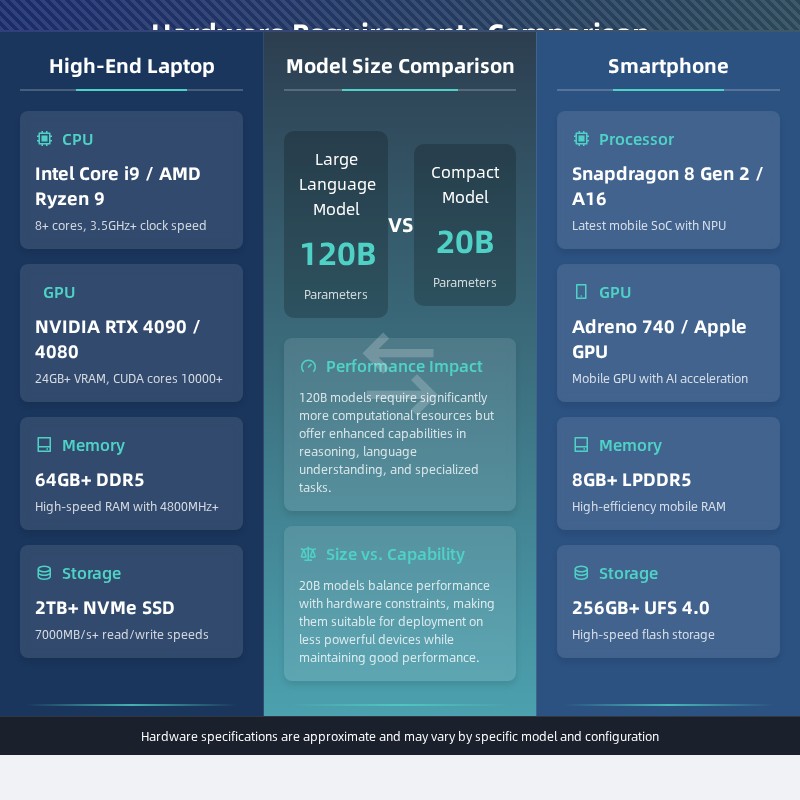

When Altman says "high-end laptop," the tech community immediately started asking: "How high-end are we talking?"

Hardware requirements comparison: High-end laptop vs smartphone capabilities for running gpt-oss models

The announcement sparked some humorous exchanges. One user, Emily, sarcastically asked whether Altman meant laptops equipped with H100s ($30,000 data center GPUs, definitely not laptop material). Another speculated about needing next-generation graphics cards that don't even exist yet.

Based on community discussions, here's what we're likely looking at:

- Large model: Possibly 120 billion parameters, requiring a laptop with a powerful GPU

- Mobile version: Around 20 billion parameters for phones

One skeptic pointed out that "nobody is running 20B models on phones right now," highlighting the ambitious nature of these claims.
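To make the hardware question concrete, here is a rough, weights-only memory estimate in Python. It simply multiplies parameter count by bits per parameter. The 120B and 20B figures are the community speculation above, the 16/8/4-bit precisions are illustrative assumptions rather than anything OpenAI has confirmed, and memory for the KV cache, activations, and the runtime itself is ignored, so real requirements would be higher.

```python
# Back-of-the-envelope estimate of the memory needed just to hold model weights.
# Assumption: weights only; KV cache, activations, and runtime overhead are ignored.

def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


# Parameter counts taken from the community speculation, not official specs.
MODELS = {"large (~120B)": 120e9, "mobile (~20B)": 20e9}

# Illustrative precisions: 16-bit floats, then 8-bit and 4-bit quantization.
PRECISIONS = [16, 8, 4]

if __name__ == "__main__":
    for name, params in MODELS.items():
        row = ", ".join(
            f"{bits}-bit: {weight_memory_gb(params, bits):.0f} GB" for bits in PRECISIONS
        )
        print(f"{name}: {row}")
```

By this estimate the large model needs about 240 GB at 16 bits and still about 60 GB at 4 bits, far more than the dedicated memory on typical laptop GPUs. The 20B model drops to about 10 GB at 4 bits, which is still a large share of a phone's total memory. That gap is exactly what the skeptics are pointing at.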

Why Go Open Source?

This is where things get interesting. OpenAI has traditionally kept its most powerful models behind a paywall, so why the sudden generosity?

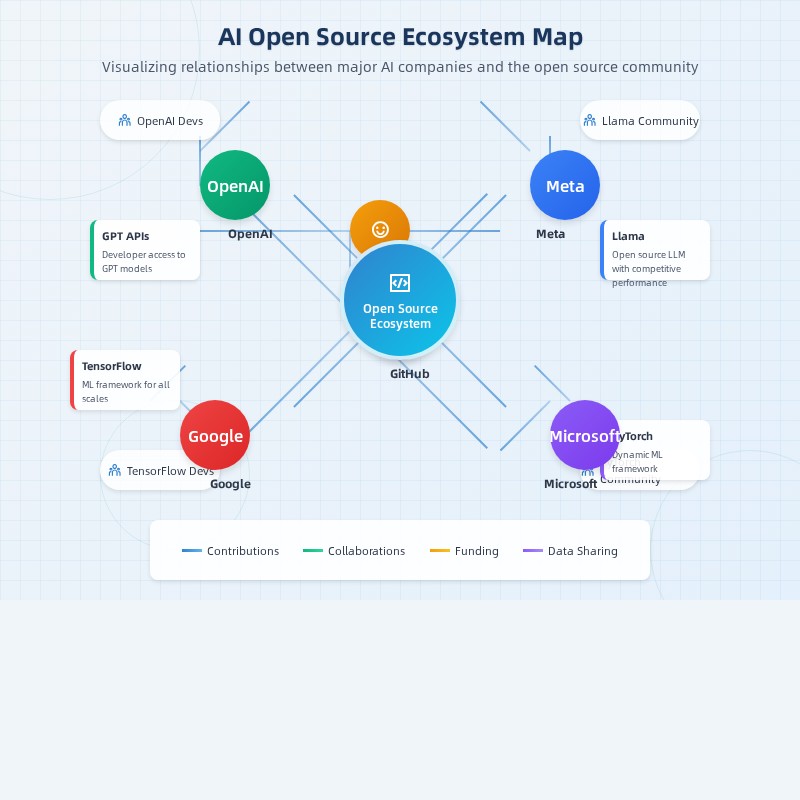

The AI open source ecosystem: Major players and their contributions to the community

The Competitive Landscape

Meta's Llama models have been gaining traction in the open-source community. Google has also been releasing open models. OpenAI risked being left behind in the open-source AI revolution.

Building an Ecosystem

Within hours of the announcement, major open-source projects were already discussing integration:

- vLLM project for efficient serving

- Ollama for easy local deployment

This immediate community response shows why open source matters: it creates an army of developers who will build tools and applications around your technology.
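For readers wondering what "easy local deployment" looks like in practice, here is a minimal Python sketch, using only the standard library, that sends a prompt to a locally running model. It assumes a server exposing an OpenAI-compatible `/v1/chat/completions` endpoint, which both Ollama (default `http://localhost:11434`) and vLLM's server (default port 8000) provide. The model tag `gpt-oss:20b` is an assumption, and the helper names `build_chat_request` and `ask_local_model` are hypothetical; substitute whatever model name your server actually lists.

```python
import json
import urllib.request

# Assumption: a local server with an OpenAI-compatible chat endpoint.
# Ollama serves one under http://localhost:11434/v1 by default;
# vLLM's server defaults to http://localhost:8000/v1.
BASE_URL = "http://localhost:11434/v1"
MODEL = "gpt-oss:20b"  # hypothetical tag: use the name your server lists


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a POST request to the chat completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str) -> str:
    """Send the prompt to the local server and return the reply text."""
    req = build_chat_request(BASE_URL, MODEL, prompt)
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    # OpenAI-style responses put the reply at choices[0].message.content.
    return body["choices"][0]["message"]["content"]


# Usage, with a local server running:
# print(ask_local_model("In one sentence, why does running AI locally help privacy?"))
```

Building the request separately from sending it makes the payload easy to inspect. Because the request targets `localhost`, the prompt never leaves your machine.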

A New Strategy?

This could signal a shift in OpenAI's business model:

- Keep the cutting-edge models (GPT-4, o1) as premium products

- Release slightly older but still powerful models to the community

- Maintain relevance and mindshare while still monetizing the latest tech

What This Means for You

If Altman's claims hold true, we're looking at a fundamental shift in how we interact with AI:

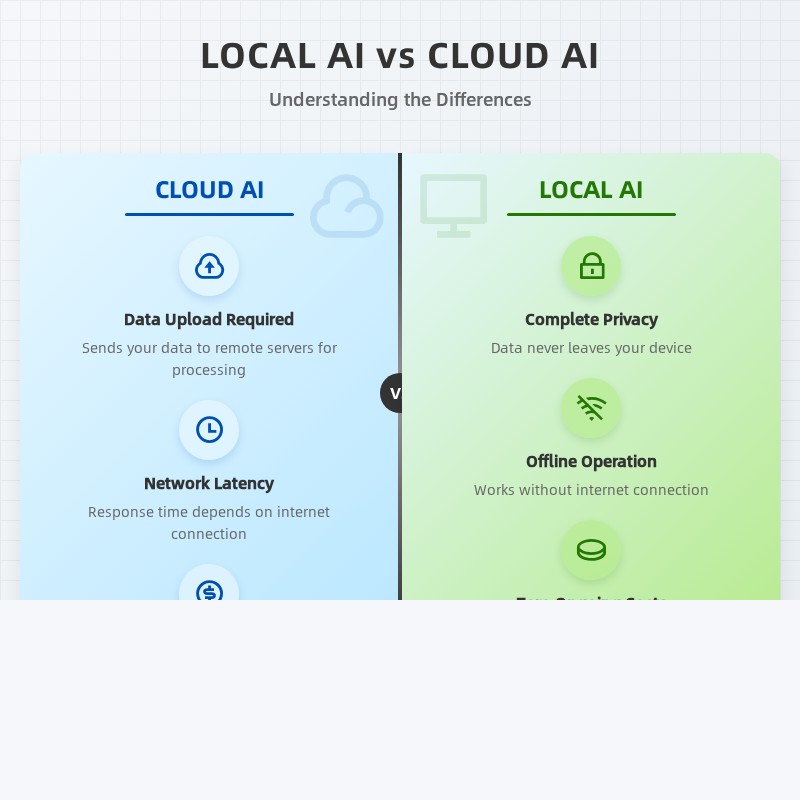

Local AI vs Cloud AI: Understanding the key differences and benefits

- Privacy: Your conversations stay on your device

- Accessibility: No subscription fees or API costs

- Reliability: Works without internet connection

- Speed: No network latency

The Reality Check

Before you get too excited, remember that we're still waiting for:

- Actual system requirements

- Real-world performance benchmarks

- Confirmation that this isn't just Silicon Valley hype

The tech community's skepticism is warranted. Running a 120B parameter model on a laptop or a 20B model on a phone pushes the boundaries of what we thought was possible in 2025.

The Bottom Line

Whether gpt-oss lives up to the hype or not, Altman is right about one thing: this represents a "major technology win." The mere possibility of running ChatGPT-level AI on personal devices shows how far we've come.

As one commenter noted, this announcement generated "real-time reactions from developers worldwide." The excitement is palpable, even if tempered with healthy skepticism.

Stay tuned as we learn more about what "high-end laptop" really means and whether your phone is about to become a lot smarter.

What do you think? Are you ready to run AI models locally, or do you prefer the convenience of cloud-based services? Let us know in the comments below.